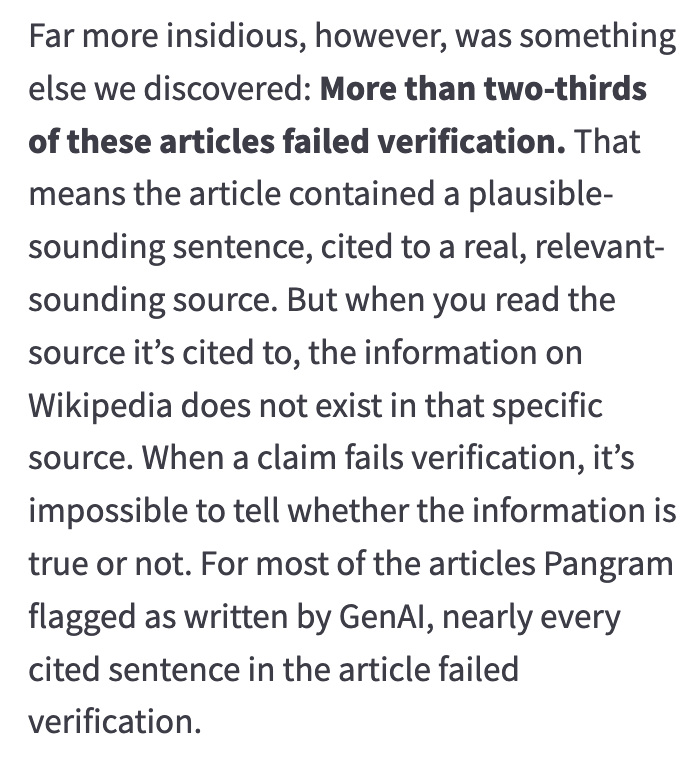

This is a damning article from the Wikipedia editors on GenAI articles written for Wikipedia: https://wikiedu.org/blog/2026/01/29/generative-ai-and-wikipedia-editing-what-we-learned-in-2025/

-

@shafik this aligns with my experience, where an AI "summary" will turn up a useful reference, but the reference does not corroborate the summary.

-

If the editors cannot keep up, then yes.

-

That is how I use the summaries: to obtain vocabulary and references. I tend to ignore the content, because once I know the vocabulary and a good source, I can just read those and not have to verify the summary, which you will always need to do.

-

You're describing my freshman class from the fall semester! We had to add in two additional lectures to help them grasp citations and why using AI for them is wrong at least 50% of the time.

Thank you for teaching people that.

-

Ok this has left my network.

I am better known for my C++ quizzes:

https://hachyderm.io/tags/Cpppolls

and my cursed code:

https://hachyderm.io/search?q=from%3Ashafik+cursed+code&type=statuses

Also my dad jokes, but I don't have a handy reference for those, so you will just have to follow if you like dad jokes.

-

@shafik Welcome to the life of every University lecturer marking essays right now.

-

@shafik

Interesting!

“Our early interventions with participants who were flagged as using generative AI for exercises that would not enter mainspace seemed to head off their future use of generative AI. We supported 6,357 new editors in fall 2025, and only 217 of them (or 3%) had multiple AI alerts. Only 5% of the participants we supported had mainspace AI alerts. That means thousands of participants successfully edited Wikipedia without using generative AI to draft their content.”

-

I am sorry

-

Yes, education definitely helps. There have been several reports that if people use LLMs in a way that makes their flaws obvious, they learn and use them in more appropriate ways.

If they can keep up, it should not be a big problem. Sounds like they have a hold of it.

-

For sure, tools are useful when you use them appropriately.

-


@shafik Yes. Indeed.

Today I opened Claude to try to find a reference for something I know is true, but is not original with me, to cite in a paper I am writing.

The first answer was a proof, which (in this particular case) was correct.

But then I told it that I didn't want a proof, only a reference to cite. I had told it in advance that I already knew it is true.

So it gave me a reference. When I looked at it, there was nothing in there stating or proving what I wanted.

So I complained and I got an "apology" (I am not sure machines can or are even entitled to apologize - at best, they should apologize on behalf of their creators).

Then it tried again, and it again gave me a reference that didn't have what I wanted.

The third time I tried, it said it gave up, that what I wanted is nowhere to be found in the literature. But this is wrong. I've seen it before, I know it is true because I can prove it (and Claude itself can prove it (correctly this time), but of course not out of nothing).

Don't ever trust a reference given by genAI unless you check it yourself. The references I got after explicitly asking for a reference, and nothing else, didn't have what I asked for.

The machine just makes things up in a probabilistic way. When it starts "apologizing" then you can know for sure that it is rather unlikely that you will get anything useful from it.

Even more concerning is if it doesn't apologize. You may suppose that the answer is right and use it for whatever purpose you had in mind. Good luck with that.

-

@MartinEscardo @shafik Google Gemini can be browbeaten into an apology and will then go right back and do exactly the same shit all over again.

-

@shafik This certainly mirrors my personal experience (and why I'll keep calling them lie-bots) where all these tools added "citations" and "links" (in Google, Bing, etc "AI summary") but it's not "real" because it's just looking for a plausible real link not one that actually says what it has imagined (as a summary).

If you want specific lists of things, you can get all sorts of links attached (the lists are wrong) or just the weather (link will usually contradict the claim).

-

@shafik The few times I've looked up references on Wikipedia, the experience was similar: the thing I wanted to learn more about turned out not to be mentioned at all in the alleged source. I don't think this was related to LLMs having written the text.

A comparative study might be better than a blanket "it's frequently wrong". Of course I expect LLMs to perform worse than humans, but the context would be helpful to put the numbers into perspective.

-

@MartinEscardo @shafik “The machine just makes things up in a probabilistic way.”

Well said!

At the risk of giving a machine human character, I find it useful to think of AI as a charming liar.
