If you use AI-generated code, you currently cannot claim copyright on it in the US.

-

@Azuaron @JeffGrigg @jamie @fsinn @pluralistic For a start, you bought the book. I doubt AI hyperscalers have met that minimum requirement. Secondly, you buy the book for your private use, not for commercial purposes. Thirdly, you describe reproduction for private purposes. Reproduce and sell, and you infringe. Fourth, you don’t use the book to instruct a machine to paraphrase the content, produce quotes and false quotes, and to write in the style of the author in an infinite number of cases.

@christianschwaegerl @JeffGrigg @jamie @fsinn @pluralistic You're making a bunch of different arguments now. The topic at hand was, "Is it copyright infringement to make and have an AI model trained on millions of books?" The answer is no. This is wholly legal.

Storing copyrighted work is legal.

Modifying copyrighted work is legal.

Storing modified copyrighted work is legal.

It doesn't matter if they have a model that is literally just plain text of every book, or if the model is a series of mathematical weights that go into an algorithm. It's already legal to have and modify copyrighted works.

What becomes illegal is reproducing and distributing copyrighted material.

No, whether it was for "commercial" or "non-commercial" purposes doesn't matter when determining if something is infringing.

No, whether it was "sold" or "distributed for free" doesn't matter when determining if something is infringing.

"What about Fair Use?" Fair use is an affirmative defense. That means that you acknowledge you are infringing, but it's an allowed type of infringement. It's still an infringement, you just don't get punished for it.

But, as already stated, nothing is infringement until there's a distribution. Without a distribution, no further analysis is needed. When a distribution occurs, it is the distribution that is analyzed to determine if it is infringing, and, if so, if there is a fair use defense. Everything that happens prior to the distribution is irrelevant when determining if an infringement has occurred, as long as the accused infringer acknowledges they have the copyrighted work (which AI companies always acknowledge).

There is one further step, because it is illegal to make a tool that is for copyright infringement. The barrier to prove this is so high, though. As long as a tool has any non-infringing uses--and we must acknowledge AI can generate non-infringing responses--then it won't be nailed with being a "tool for copyright infringement". This has to be, like, "Hey, I made a cracker for DRM, it can only be used to crack DRM. It literally can't do anything legal."

Even video game emulators haven't been hit with being "tools for copyright infringement" because there are legitimate uses for them (personal backup, archival, etc.), even though everyone knows they're 99% for infringement.

-

@Azuaron @JeffGrigg @jamie @fsinn @pluralistic So if somebody invents a gun that simultaneously produces soap bubbles, shooting someone is ok? I doubt it.

You’re trying to normalise LLMs with analogies of profane private behaviour. That’s fundamentally flawed.

LLMs have new characteristics, capabilities. There hasn’t been a machine before that could churn out one million versions of a novel in the style of a contemporary author or art by living creators in no time after being fed their work.

@jamie so proprietary projects that are made with llms can be leaked legally since there's no copyright for it ?

@SRAZKVT It’s a bit more complicated than that for reasons other than copyright (mentioned in my next couple posts in the thread). TL;DR: you may still have to defend it even if they can’t enforce copyright, and they may also have other grounds for a lawsuit.

-

@christianschwaegerl @JeffGrigg @jamie @fsinn @pluralistic Buddy, I'm not trying to "normalize" anything, especially not LLMs. I'm telling you how the law works. I never said the law was good.

@Azuaron @JeffGrigg @jamie @fsinn @pluralistic It’s wide open how existing law will be interpreted and applied here, and which new laws will be created to capture the novelty of the technology. The Anthropic case is interesting. A large number of court cases will proceed and the differences between a private book purchase and an all-purpose multi-billion content production technology will hopefully be apparent to judges.

-

FWIW I'm not a lawyer and I'm not recommending that you do this.

Even if companies have no legal standing on copyright, their legal team will try it. It *will* cost you money.

But man, oh man, I'm gonna have popcorn ready for when someone inevitably pulls this move.

@jamie It would be so weird if people think wholesale copyright violation at a global scale to train a model is acceptable. But then, what? Individual project-sized chunks of output ARE copyrightable? But then if you hoover up all of those projects on GitHub to train the next AI, including supposedly private and copyrighted AI-generated repos? That’s fair?

A bizarre situation. Goldilocks copyright. “This data is too small to copyright.” “This data is too big to copyright.” “But this data is just right.”

-

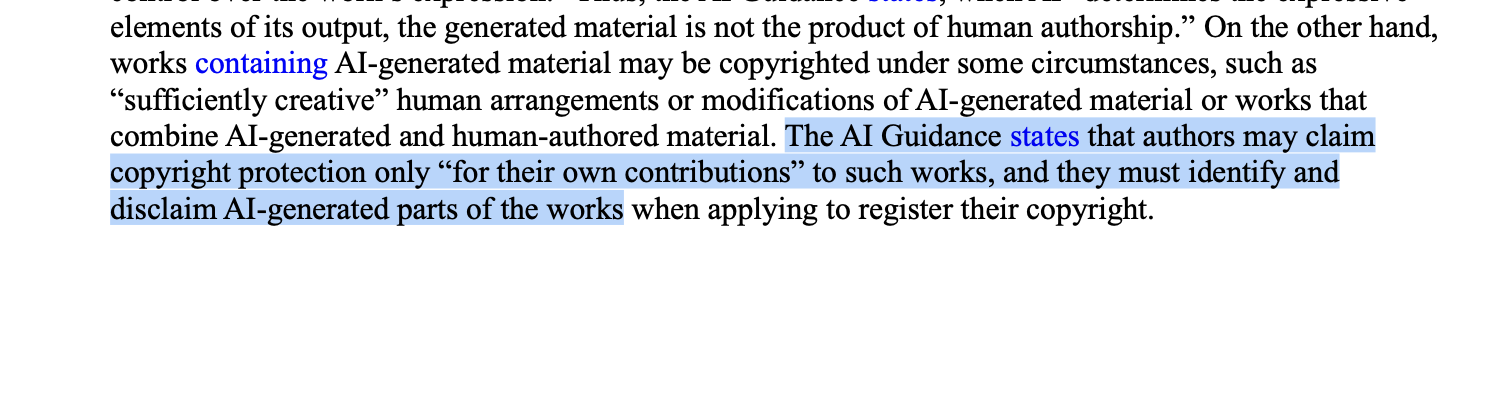

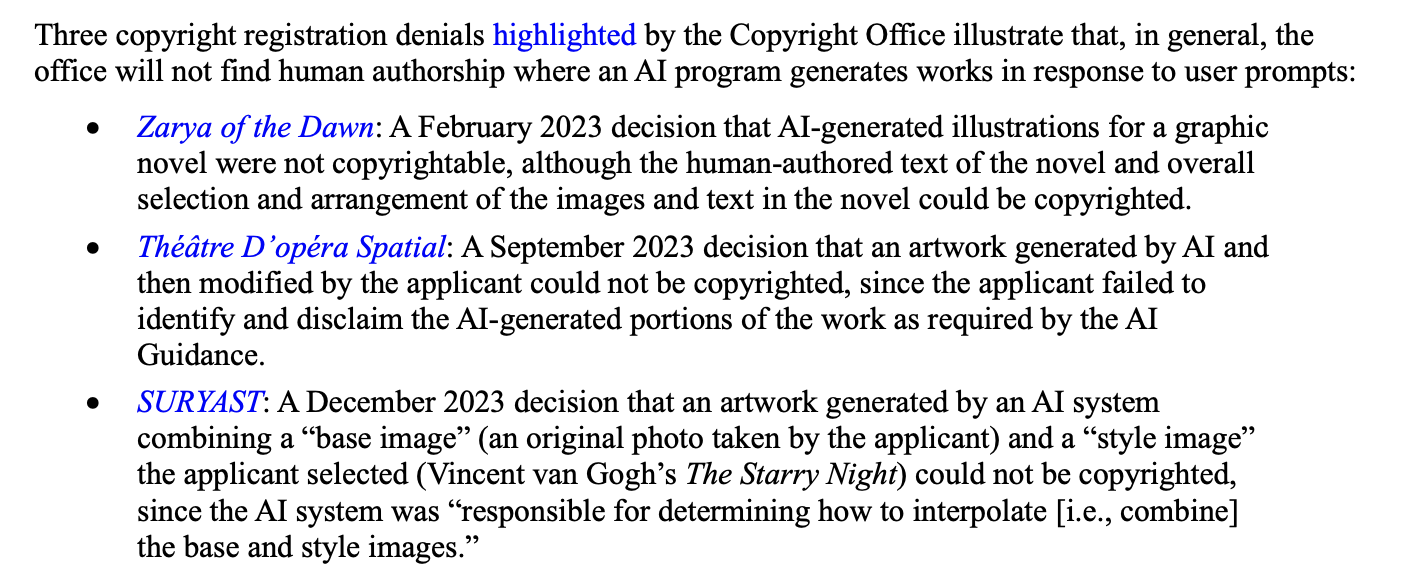

If you use AI-generated code, you currently cannot claim copyright on it in the US. If you fail to disclose/disclaim exactly which parts were not written by a human, you forfeit your copyright claim on *the entire codebase*.

This means copyright notices and even licenses folks are putting on their vibe-coded GitHub repos are unenforceable. The AI-generated code, and possibly the whole project, becomes public domain.

Source: https://www.congress.gov/crs_external_products/LSB/PDF/LSB10922/LSB10922.8.pdf

-

It'll be interesting to see what happens when a company pisses off an employee to the point where that person creates a public repo containing all the company's AI-generated code. I guarantee what's AI-generated and what's human-written isn't called out anywhere in the code, meaning the entire codebase becomes public domain.

While the company may have recourse based on the employment agreement (which varies in enforceability by state), I doubt there'd be any on the basis of copyright.

@jamie Also of note, AI booster companies like Microsoft have a specific interest in hiding how much of their code is human-written/curated, in order to overstate the usefulness of their AI tools - seems like that might come back to bite them on the ass...

-

@jamie I can imagine the law being quickly changed to come to the aid of the vibe-coders.

-

@jamie I would not like to be in the position of trying to explain that to a judge though.

But I don't see the argument that having some AI-generated code in something would necessarily void the copyright on the human-generated code.